OpenCV Learning Notes (English Version)

Last updated: January 24, 2023, 14:22

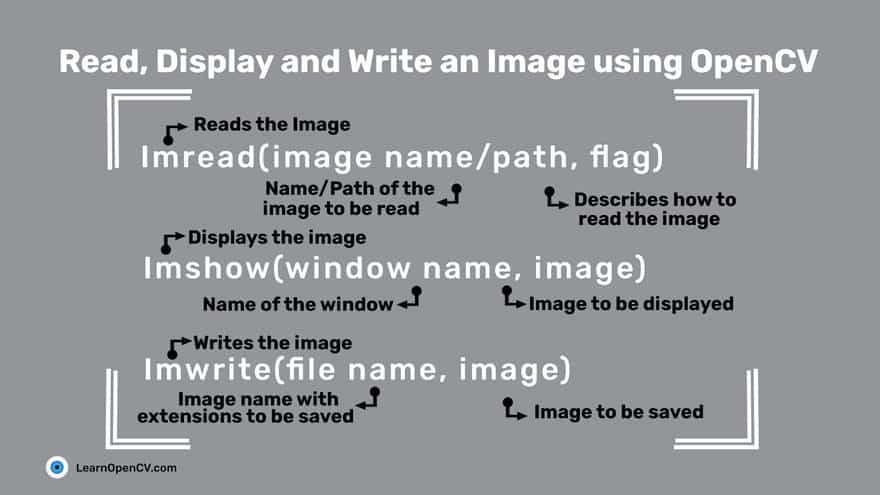

Read, Display and Write an Image

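cv2.imread() takes an optional read flag as its second argument:

- cv2.IMREAD_UNCHANGED or -1: loads the image as is, including the alpha channel if there is one
- cv2.IMREAD_GRAYSCALE or 0: loads the image as a single-channel grayscale image
- cv2.IMREAD_COLOR or 1: loads a 3-channel BGR color image, ignoring transparency (this is the default)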


Reading and Writing Videos

reading

From a file


- cv2.VideoCapture: creates a video capture object, which helps stream or display the video.
- cv2.VideoWriter: saves the output video to a directory.
- In addition, we also discuss other needed functions such as cv2.imshow(), cv2.waitKey() and the get() method, which is used to read video metadata such as frame height, width, fps, etc.

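The get() method takes a property index:

- cv2.VideoCapture.get(0): current position of the video file in milliseconds
- cv2.VideoCapture.get(1): 0-based index of the frame to be decoded or captured next
- cv2.VideoCapture.get(2): relative position of the video file: 0 = start of the film, 1 = end of the film
- cv2.VideoCapture.get(3): width of the frames in the video stream
- cv2.VideoCapture.get(4): height of the frames in the video stream
- cv2.VideoCapture.get(5): frame rate
- cv2.VideoCapture.get(7): number of frames in the video file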

From Image-sequence


From a webcam


You might be wondering about the flag CAP_DSHOW. This is an optional argument, and is therefore not required. CAP_DSHOW is just another video-capture API preference, short for DirectShow via video input.

writing

step

Initialize a video capture object (as discussed in the previous sections) to read the video stream into memory, using any of the sources described above.

Retrieve the image frame height and width using the get() method.

```python
import cv2

# Any source from the reading section works; this file name is hypothetical
vid_capture = cv2.VideoCapture('input_video.mp4')
# Obtain frame size information using get() method
frame_width = int(vid_capture.get(3))
frame_height = int(vid_capture.get(4))
frame_size = (frame_width, frame_height)
fps = 20
```

Create a video writer object.

```python
output = cv2.VideoWriter('Resources/output_video_from_file.avi', cv2.VideoWriter_fourcc('M', 'J', 'P', 'G'), fps, frame_size)
```

The parameters of cv2.VideoWriter() are:

- filename: pathname for the output video file
- apiPreference: API backends identifier (optional)
- fourcc: 4-character code of the codec used to compress the frames, e.g. cv2.VideoWriter_fourcc('M','J','P','G') for AVI, or cv2.VideoWriter_fourcc(*'mp4v') for MP4 (XVID is normally paired with .avi)
- fps: frame rate of the created video stream
- frame_size: size of the video frames, given as (width, height)
- isColor: if not zero, the encoder expects and encodes color frames; otherwise it works with grayscale frames (older OpenCV documentation notes that this flag is supported on Windows only)

Use the video writer object to save the video stream to disk.

```python
while vid_capture.isOpened():
    # read() returns a success flag and the next frame
    ret, frame = vid_capture.read()
    if ret:
        output.write(frame)
    else:
        print('Stream disconnected')
        break

# Release the capture and writer objects when done
vid_capture.release()
output.release()
```

Errors

reading

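While reading frames, errors can occur if the path is wrong, the file is corrupted, or a frame is missing. OpenCV usually reports these cases through return values rather than exceptions: isOpened() returns False, and read() returns ret = False.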

writing

- The most common are frame size errors and API preference errors.

- If the frame size does not match the size of the video frames, you still get a video file in the output directory, but it will be blank.

- If you use the NumPy shape attribute to retrieve the frame size, remember to reverse the order: shape is height x width x channels, while VideoWriter expects (width, height).

- If an API preference error is thrown, you might need to pass the CAP_ANY flag in the VideoCapture() arguments. This can be seen in the webcam example, where CAP_DSHOW is used to avoid warnings being generated.
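
This sketch derives frame_size from a frame's NumPy shape. A zero-filled array stands in for a real frame. Note the swap from (height, width) to (width, height):

```python
import numpy as np

# Stand-in for a decoded frame: 480 rows (height) x 640 columns (width) x 3 channels
frame = np.zeros((480, 640, 3), dtype=np.uint8)

height, width = frame.shape[:2]  # NumPy order: (height, width, channels)
frame_size = (width, height)     # VideoWriter expects (width, height)
print(frame.shape, frame_size)   # (480, 640, 3) (640, 480)
```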

Resizing

When resizing an image:

- It is important to keep in mind the original aspect ratio of the image (i.e. width by height), if you want to maintain the same in the resized image too.

- Reducing the size of an image will require resampling of the pixels.

- Increasing the size of an image requires reconstruction of the image. This means you need to interpolate new pixels.

Width and Height


Scaling factor


Interpolation Methods

- INTER_AREA: uses pixel area relation for resampling. This is best suited for reducing the size of an image (shrinking). When used for zooming into the image, it behaves similarly to the INTER_NEAREST method.
- INTER_CUBIC: uses bicubic interpolation for resizing the image. While resizing and interpolating new pixels, this method acts on the 4×4 neighboring pixels of the image. It then takes the weighted average of the 16 pixels to create the new interpolated pixel.
- INTER_LINEAR: somewhat similar to INTER_CUBIC interpolation, but unlike INTER_CUBIC, it uses 2×2 neighboring pixels to get the weighted average for the interpolated pixel. This is the default method of cv2.resize().
- INTER_NEAREST: uses the nearest neighbor concept for interpolation. This is one of the simplest methods, using only one neighboring pixel from the image for interpolation.


Cropping

Basic Cropping
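
In OpenCV's Python API an image is a NumPy array. Cropping is therefore plain array slicing, with rows (y) first and columns (x) second. This sketch uses a synthetic image and arbitrary coordinates:

```python
import numpy as np

# Synthetic 400x600 BGR image; with a real file use cv2.imread() instead
image = np.zeros((400, 600, 3), dtype=np.uint8)
image[100:300, 200:400] = (0, 255, 0)  # a green rectangle to crop out

# Cropping is slicing: image[y_start:y_end, x_start:x_end]
cropped = image[100:300, 200:400]
print(cropped.shape)  # (200, 200, 3)

# Slicing returns a view sharing memory with the original; copy() makes it independent
cropped_copy = cropped.copy()
```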


Dividing Into Small Patches
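
This sketch splits an image into a grid of patches with nested loops. It assumes a hypothetical patch size of 100x200 pixels (height x width) and a synthetic 400x600 image. With these numbers the grid is 4 rows by 3 columns.

```python
import numpy as np

image = np.random.randint(0, 256, (400, 600, 3), dtype=np.uint8)  # synthetic input
img_h, img_w = image.shape[:2]

patch_h, patch_w = 100, 200  # hypothetical patch size (height, width)

patches = []
for y in range(0, img_h, patch_h):
    for x in range(0, img_w, patch_w):
        # Slices past the border are clipped, so edge patches can be smaller
        # when the image size is not a multiple of the patch size
        patches.append(image[y:y + patch_h, x:x + patch_w])

print(len(patches), patches[0].shape)  # 12 (100, 200, 3)
```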


Rotation and Translation

Rotation


The getRotationMatrix2D() function takes the following arguments:

- center: the center of rotation for the input image
- angle: the angle of rotation in degrees; positive values rotate counterclockwise (the origin is the top-left corner)
- scale: an isotropic scale factor which scales the image up or down according to the value provided

The following are the arguments of the warpAffine() function:

- src: the source image
- M: the transformation matrix
- dsize: size of the output image, given as (width, height)
- dst: the output image
- flags: combination of interpolation methods such as INTER_LINEAR or INTER_NEAREST
- borderMode: the pixel extrapolation method
- borderValue: the value to be used in case of a constant border; it has a default value of 0

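Note that the image width and height must be integers, so wrap the values in dsize with int() to make sure they have the correct type; otherwise warpAffine() raises an error.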


Translation

Translation (shifting the image along the x and y axes)


Annotating

Color Line


point(x, y):

- The x-axis represents the horizontal direction or the columns of the image.

- The y-axis represents the vertical direction or the rows of the image.

So a line whose two endpoints share the same y value is a horizontal line.

Outlined Circle


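The lineType parameter does not select whether the line is solid, dashed, or dotted; what it actually changes is the algorithm used to generate (rasterize) the line, e.g. cv2.LINE_4, cv2.LINE_8 (the default) or cv2.LINE_AA (anti-aliased).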

Filled Circle


Rectangles

In the rectangle() function, you provide the starting point (top left) and ending point (bottom right) for the corners of the rectangle.


Ellipses

pass

Half-Ellipses

pass

Text


org specifies the location of the bottom-left corner of the text string in the image.

OpenCV supports several font-face styles from the Hershey font collection, plus an italic style that is enabled by combining a font face with the cv2.FONT_ITALIC flag.

Color spaces

RGB Color Space

The RGB colorspace has the following properties:

- It is an additive colorspace where colors are obtained by a linear combination of Red, Green, and Blue values.

- The three channels are correlated by the amount of light hitting the surface.

The inherent problems associated with the RGB color space:

- Significant perceptual non-uniformity.

- Mixing of chrominance (color-related information) and luminance (intensity-related information) data.

LAB Color-Space

- L – Lightness ( Intensity ).

- a – color component ranging from Green to Magenta.

- b – color component ranging from Blue to Yellow.

properties

- Perceptually uniform color space which approximates how we perceive color.

- Independent of device ( capturing or displaying ).

- Used extensively in Adobe Photoshop.

- Is related to the RGB color space by a complex transformation equation.


YCrCb Color-Space

pass

HSV Color Space

- H – Hue (Dominant Wavelength).

- S – Saturation (Purity / shades of the color).

- V – Value ( Intensity ).


- There is a drastic difference between the hue values of the red piece in the outdoor and indoor images. This is because hue is represented as a circle and red sits at the starting angle, so red can take values in both [300, 360] and [0, 60] degrees.

segmentation

simplest way

Data Analysis for a Better Solution

Filtering

Reference: Image Filtering Using Convolution in OpenCV (LearnOpenCV)

pass

Thresholding

Binary Thresholding

Binary Thresholding ( THRESH_BINARY )


Inverse-Binary Thresholding

Inverse-Binary Thresholding ( THRESH_BINARY_INV )


Truncate Thresholding

Truncate Thresholding ( THRESH_TRUNC )


Threshold to Zero

Threshold to Zero ( THRESH_TOZERO )


Inverted Threshold to Zero

Inverted Threshold to Zero ( THRESH_TOZERO_INV )


Blob Detection

pass

Edge Detection

pass

Mouse and Trackbar

pass

Contour Detection

Simple Background Estimation

Deep Learning with OpenCV DNN
